Last May, a fake image of an explosion at the Pentagon sent ripples through US stock markets, highlighting the DeepSwap and DeepFake risks of generative AI (GenAI).

The AI-generated image, which appeared to show a blast outside the Pentagon, spread across Twitter. Before authorities could announce that it was a hoax, the stock market was trading 0.26 percent lower. Millions were lost for nothing.

The image was convincing enough to pass as real, but closer inspection reveals inconsistencies in the widths of the building's columns. As GenAI continues to develop, DeepFakes will become harder, if not impossible, to spot.


DeepSwap

DeepSwap technology has genuine business uses that could transform advertising, entertainment, and virtual reality. You could star in your own virtual reality show, mixing and mingling with celebrities living and dead, for a level of personalization and immersion never possible before.

The tech also has more sinister uses.

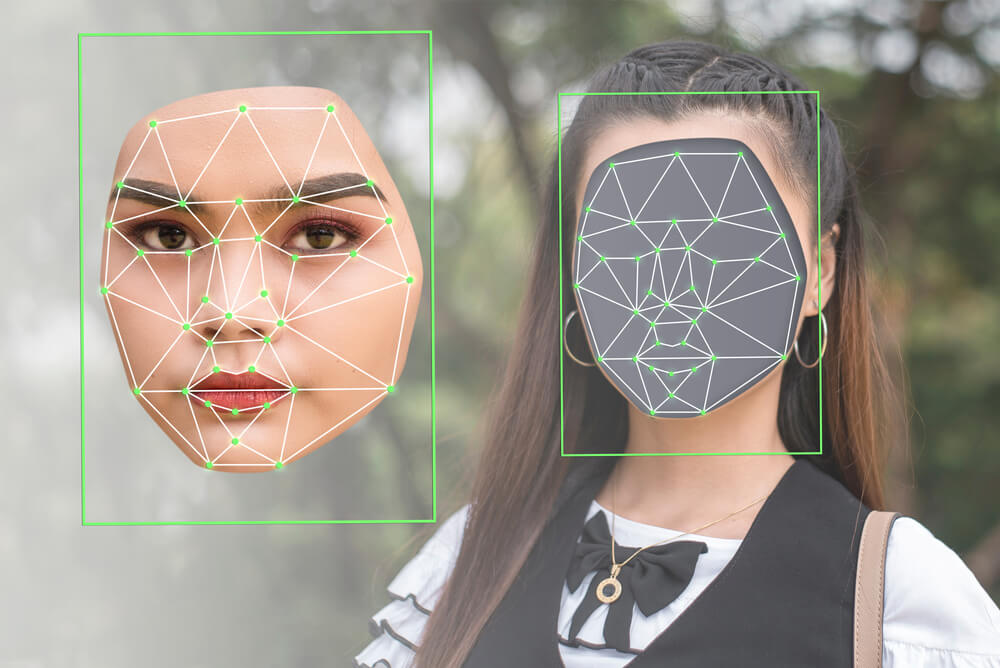

New tools are changing the way we edit and manipulate images and videos. DeepSwap is a new web-based tool that uses deep learning to swap the faces of two people in a picture or video.

The AI face generator runs on deep neural networks, and its user-friendly design makes it accessible to almost anyone.

The technology behind DeepSwap detects and analyzes facial features with high accuracy, so the swapped faces look natural and seamless.

Did you know that Taylor is now giving tennis lessons? Did you see former U.S. President Donald Trump fall while being arrested? Neither event happened, but the fake Trump video, made with a combination of DeepSwap and DeepFake techniques, did the trick, piling up nearly 5 million views.

DeepFake technology has made headlines for its abuse in spreading disinformation and enabling cybercrime. It can depict innocent people making racist remarks or spreading political propaganda, and that is just the tip of the iceberg.

DeepFakes

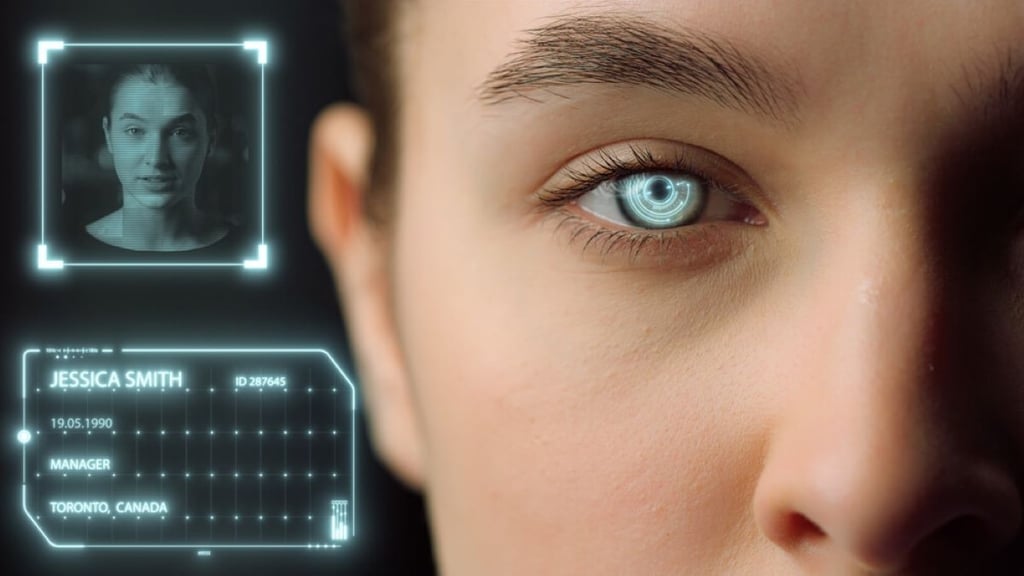

DeepFakes use AI to generate entirely new video or audio that depicts something that never actually happened.

A DeepFake is footage generated by a computer model that has been trained on countless existing images.

The most common method for creating DeepFakes starts with a target video as the basis, then uses a collection of video clips of the person you want to insert into it.

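Combining this with what are known as Generative Adversarial Networks (GANs) irons out flaws in the DeepFake over multiple rounds of refinement. A GAN pits a generator, which produces the fakes, against a discriminator, which tries to tell them apart from real footage; each round, the generator adjusts to fool the discriminator, until the result is very hard for DeepFake detectors to catch.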
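For readers who want the mechanics, the standard GAN formulation introduced by Ian Goodfellow and colleagues in 2014 writes this contest as a two-player minimax game. Here $G$ is the generator, which turns random noise $z$ into a fake sample, and $D$ is the discriminator, which outputs the probability that its input is real. This is the textbook notation, not a description of how DeepSwap or any particular DeepFake tool is built.

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator pushes this value up by scoring real footage near 1 and fakes near 0; the generator pushes it down by producing fakes that $D$ scores near 1. In theory, the game settles when the generator's output is indistinguishable from the real data and the best the discriminator can do is output 1/2 for everything.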

Detecting a DeepFake

Several indicators can give a DeepFake away, such as details that appear blurry or obscured: problems with skin or hair, or faces that look blurrier than their surroundings. Unnatural lighting can also be a giveaway, as can words or sounds that fail to match the visuals, which happens when the audio has not been manipulated as carefully as the video.
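The "face blurrier than its surroundings" cue can be turned into a rough check. The sketch below is a toy heuristic, not how Sensity or any other commercial detector works: it measures sharpness as the variance of a Laplacian filter over a grayscale frame (stored as plain nested lists) and flags the frame when the face region is much smoother than a background region. The `face_box` and `background_box` coordinates and the 0.5 `ratio` threshold are hypothetical inputs; in practice the boxes would have to come from a face detector.

```python
from typing import List, Tuple

# Grayscale frame: rows of pixel intensities (0-255).
Image = List[List[float]]
# Hypothetical region format: (top, left, bottom, right), bottom/right exclusive.
Box = Tuple[int, int, int, int]


def laplacian_variance(img: Image) -> float:
    """Variance of the 4-neighbour Laplacian; lower values mean a blurrier region."""
    responses = []
    for y in range(1, len(img) - 1):
        for x in range(1, len(img[y]) - 1):
            lap = (img[y - 1][x] + img[y + 1][x]
                   + img[y][x - 1] + img[y][x + 1]
                   - 4 * img[y][x])
            responses.append(lap)
    if not responses:  # region too small to filter
        return 0.0
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


def crop(img: Image, box: Box) -> Image:
    """Cut out the rectangular region described by box."""
    top, left, bottom, right = box
    return [row[left:right] for row in img[top:bottom]]


def face_blurrier_than_scene(img: Image, face_box: Box,
                             background_box: Box, ratio: float = 0.5) -> bool:
    """Toy heuristic: flag the frame if the face is much smoother than the background."""
    face_sharpness = laplacian_variance(crop(img, face_box))
    background_sharpness = laplacian_variance(crop(img, background_box))
    return face_sharpness < ratio * background_sharpness


# Usage: a sharp checkerboard "scene" with a perfectly flat (blurred) 10x10 face patch.
frame = [[255.0 if (x + y) % 2 else 0.0 for x in range(20)] for y in range(20)]
for y in range(5, 15):
    for x in range(5, 15):
        frame[y][x] = 128.0
print(face_blurrier_than_scene(frame, (5, 5, 15, 15), (0, 0, 5, 20)))  # True
```

A single cue like this produces plenty of false alarms (a genuinely out-of-focus face would also trip it), which is why it only makes sense alongside the other indicators, such as lighting and audio mismatches.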

It also pays to check the reliability of the video's source. Some companies, such as Sensity, have developed DeepFake antivirus tools that alert users by email when they are watching something that appears to be AI-generated.

Microsoft President Brad Smith recently said that people need to know when realistic DeepFakes are being used to promote false content.

“We need to take steps to protect against the alteration of legitimate content with an intent to deceive or defraud people through the use of AI,” Smith said.

He urged a “Know Your Customer”-style system for developers of powerful AI models, so that they can keep track of how the technology is used and let the public know which content is AI-generated, helping people identify fake videos.
