Advertising is a powerful tool that can make us buy things we sometimes don’t even need. Marketers have long used it to send messages, often subliminal ones, that make us open our wallets or charge our credit cards. A thick, sauce-drenched burger or a fresh, thirst-quenching drink will usually do the trick. Influence Engineering and Emotion AI are taking that to the next level.

Influence engineering (IE) is the practice of creating algorithms designed to influence user choices and promote specific online behaviors. The technology is expected to significantly impact digital advertising.

Influence engineering is directly related to emotion AI: the use of artificial intelligence to detect nuances in our sentiments, text messages, video, faces, and voices, and to interpret their meaning in order to better understand and manage our likes and dislikes.

How does Influence Engineering (IE) work?

Companies today collect data on user behavior and buying preferences to gain behavioral insights, which allow them to create targeted messages and experiences that influence our decision-making. Personalization, social proof (evidence that a product or solution worked for others), and the scarcity or uniqueness of a product are all persuasion strategies that marketers use to influence our purchasing behavior.

Powered by AI, IE uses techniques that analyze our sentiments, voices, and facial expressions to boost marketing opportunities.

Sentiment analysis, or opinion mining, is a natural language processing (NLP) technique that classifies customer feedback, such as reviews, as positive, negative, or neutral in order to gain insights into customer needs.

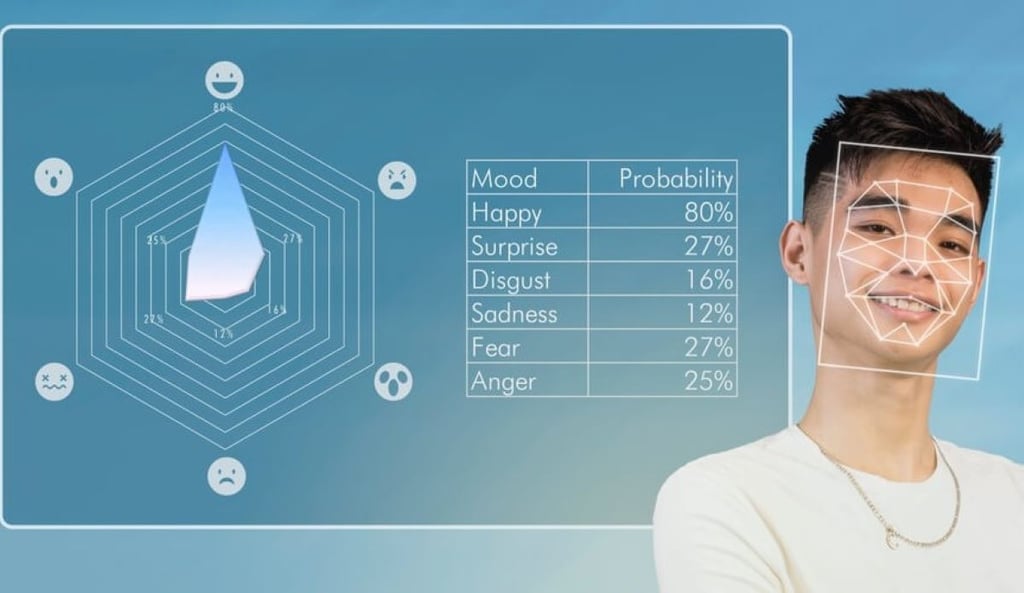
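As a rough illustration of the positive/negative/neutral grouping described above, here is a minimal Python sketch. It assumes a tiny hand-made word list (`POSITIVE_WORDS` and `NEGATIVE_WORDS` are hypothetical, invented for this example); production systems typically rely on much larger lexicons or trained language models.

```python
import re

# Hypothetical mini-lexicons, for illustration only.
POSITIVE_WORDS = {"good", "great", "love", "excellent", "happy", "fast"}
NEGATIVE_WORDS = {"bad", "poor", "hate", "terrible", "slow", "broken"}


def classify_sentiment(review: str) -> str:
    """Label a review 'positive', 'negative', or 'neutral' by counting words."""
    words = re.findall(r"[a-z']+", review.lower())
    positives = sum(word in POSITIVE_WORDS for word in words)
    negatives = sum(word in NEGATIVE_WORDS for word in words)
    score = positives - negatives
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    reviews = [
        "Great phone, I love it",
        "Terrible battery and slow screen",
        "It arrived on Tuesday",
    ]
    for text in reviews:
        print(f"{text!r} -> {classify_sentiment(text)}")
```

A word-count approach like this misses sarcasm, negation ("not good"), and context, which is one reason real sentiment tools lean on machine learning instead.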

Facial Expression Recognition uses computer vision algorithms to detect and analyze facial movements and expressions, allowing marketers to infer an individual’s emotional state. These systems discern the edges that form an object, much as the human visual system does.

Voice AI analyzes, measures, and quantifies emotions in the human voice, detecting negative, neutral, or positive feelings toward specific subjects, products, or events.

IE helps businesses develop personalized products and services, optimize store layouts and online displays, categorize customer moods and reactions, and provide more empathetic interactions that improve customer satisfaction.

How does Emotion AI work?

IE and emotion AI both aim to understand and influence human behavior, but the latter focuses more on emotional intelligence.

Searches for emotion AI have increased by 525% over the past 5 years. In 2022, the emotion detection and recognition (EDR) market, which uses emotion AI to identify, process, and replicate human emotions and feelings, was valued at $39.63 billion. It is projected to grow at 17% yearly to reach $136.46 billion by 2030.
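The growth figures above can be sanity-checked with the standard compound-growth formula. The Python sketch below assumes the projection compounds annually over the 8 years from 2022 to 2030; that horizon and the function names are assumptions made for illustration, not details given by the market forecast itself.

```python
def project_value(start_value: float, annual_rate: float, years: int) -> float:
    """Value after compounding `annual_rate` once per year for `years` years."""
    return start_value * (1 + annual_rate) ** years


def implied_annual_rate(start_value: float, end_value: float, years: int) -> float:
    """Annual growth rate that turns `start_value` into `end_value`."""
    return (end_value / start_value) ** (1 / years) - 1


if __name__ == "__main__":
    start_billion = 39.63   # 2022 market value quoted above
    end_billion = 136.46    # 2030 projection quoted above
    years = 2030 - 2022     # assumed compounding horizon

    projected = project_value(start_billion, 0.17, years)
    rate = implied_annual_rate(start_billion, end_billion, years)
    print(f"Compounding 17% for {years} years: ${projected:.2f} billion")
    print(f"Rate implied by the two endpoints: {rate:.1%}")
```

Under these assumptions, compounding at exactly 17% lands slightly above the quoted 2030 figure, which suggests the 17% is a rounded rate.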

A few Emotion AI startups, such as Beyond Verbal, Evrmore, and Emotech, are trending. Growth in the field is driven by numerous practical applications that can capture a range of feelings and narrow the gap between humans and machines.

To understand users’ emotions, Emotion AI needs data, which it draws from social media activity, speech and actions in video recordings, physiological sensors in devices, and more.

Detection technology identifies features that signal emotion, such as eyebrow movement, mouth shape, and eye gaze, to determine whether a person is happy, sad, angry, excited, or disappointed. For voice, speech-based emotion detection techniques use pitch, volume, and tempo to infer whether a person is excited, frustrated, or bored.

The data is used to train a machine learning algorithm that can accurately predict users’ emotional states. Emotion AI can then be deployed to improve user experience and increase sales through richer, more individualized content, such as in advertising.
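To make the train-then-predict idea concrete, here is a minimal Python sketch of a nearest-centroid classifier over voice features. Everything in it is an assumption for illustration: the sample values in `TRAINING_SAMPLES` are invented, the three features (pitch, volume, tempo) are taken as already extracted from audio, and real Emotion AI products generally use far richer features and models.

```python
from math import dist
from statistics import mean

# Hypothetical labeled samples: (pitch in Hz, volume in dB, tempo in words/min).
TRAINING_SAMPLES = [
    ((220.0, 70.0, 180.0), "excited"),
    ((230.0, 72.0, 190.0), "excited"),
    ((200.0, 75.0, 150.0), "frustrated"),
    ((190.0, 78.0, 140.0), "frustrated"),
    ((120.0, 55.0, 100.0), "bored"),
    ((110.0, 50.0, 90.0), "bored"),
]


def train_centroids(samples):
    """Average the feature vectors of each label into one centroid per label."""
    grouped = {}
    for features, label in samples:
        grouped.setdefault(label, []).append(features)
    return {
        label: tuple(mean(column) for column in zip(*rows))
        for label, rows in grouped.items()
    }


def predict(centroids, features):
    """Return the label whose centroid is closest in Euclidean distance."""
    # Features are left unscaled here for brevity; real pipelines normalize them.
    return min(centroids, key=lambda label: dist(centroids[label], features))


if __name__ == "__main__":
    centroids = train_centroids(TRAINING_SAMPLES)
    new_voice_sample = (225.0, 71.0, 185.0)
    print(predict(centroids, new_voice_sample))
```

The design is deliberately simple: "training" just averages examples per emotion, and prediction picks the closest average, which mirrors the learn-from-data step described above without any external libraries.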

Artificial Emotional Intelligence

When humans make decisions based on emotions, these are likely to result in erroneous actions. Enter Emotion AI, which can help us apply mindful judgment and make the right call by using artificial empathy, technology’s version of emotional intelligence, or EQ.

Emotion AI incorporates artificial empathy into machines to build smart products that can understand and respond to human emotions effectively.

An application can be programmed to analyze the voice of a patient and detect if that person suffers from Parkinson’s disease. In e-gaming, developers can deploy artificial empathy to create human-like characters that match a player’s emotions.

Researchers are exploring how to simulate EQ in machines themselves and evoke abilities like empathy and compassion, with the goal of better understanding human emotions and aligning strategies around them.

But it’s a tough task. Modeling the range of human emotion requires integrating psychology, neuroscience, sociology, and culture with code.

Current technology allows Emotion AI assistants to become more relatable and sensitive to human feelings. Beyond improving content and customer satisfaction with products and services, this brings many associated health benefits.

Emotion AI health benefits

Emotion AI counselors with artificial empathy could make patients feel truly understood and pave the way for mental health products geared to better diagnosis and treatment.

A market exists for mental health apps that can detect early signs of mental health issues such as depression, monitor a user’s emotional state, and provide real-time feedback, enabling early intervention.

Accident prevention while driving

With about 1.5 billion vehicles registered in the world, road accidents are bound to happen, and the driver’s mental and emotional state is usually a key factor behind these crashes. According to a survey, there are 11.7 deaths per 100,000 people in motor vehicle crashes in the U.S. alone.

Using sensors, several Emotion AI applications can monitor the driver’s state and detect signs of stress, frustration, or fatigue. The technology can then adjust the car’s temperature settings, ambient lighting, or genre of music to help the driver stay alert and focused on the task, preventing distractions and accidents.
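The detect-then-adjust loop described above can be sketched in a few lines of Python. This is a hypothetical illustration only: the `CabinSettings` fields, the state names, and the specific temperature, lighting, and music choices in `ADJUSTMENTS` are invented for the example and do not describe any particular in-car product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CabinSettings:
    temperature_c: float
    lighting: str
    music_genre: str


# Hypothetical responses to each detected driver state.
ADJUSTMENTS = {
    "fatigue": CabinSettings(temperature_c=19.0, lighting="bright", music_genre="upbeat"),
    "stress": CabinSettings(temperature_c=22.0, lighting="soft", music_genre="calm"),
    "frustration": CabinSettings(temperature_c=21.0, lighting="soft", music_genre="calm"),
}


def adjust_cabin(driver_state: str, current: CabinSettings) -> CabinSettings:
    """Return new cabin settings for a detected state, or keep the current ones."""
    return ADJUSTMENTS.get(driver_state, current)


if __name__ == "__main__":
    current = CabinSettings(temperature_c=23.0, lighting="dim", music_genre="talk radio")
    for state in ["fatigue", "stress", "calm"]:
        print(state, "->", adjust_cabin(state, current))
```

In a real system the `driver_state` value would come from the sensor-based emotion detection step; here it is passed in directly so the adjustment logic stays easy to follow.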